NeuroMANCER

NeuroMANCER v1.4.2

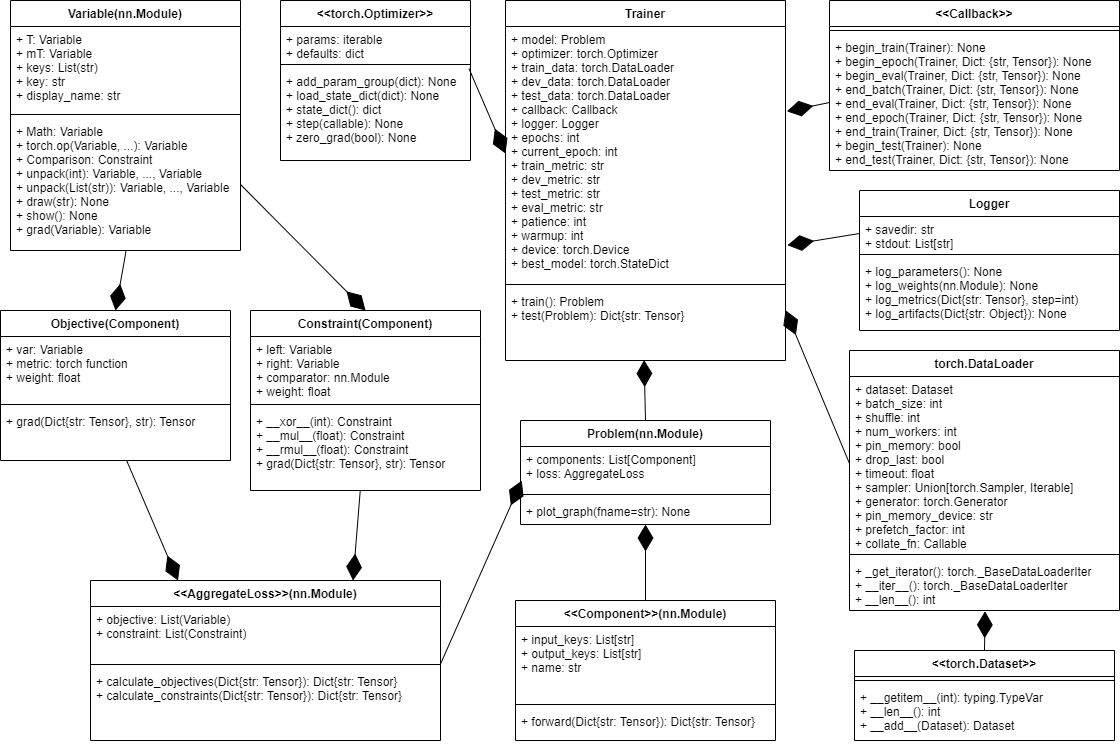

Neural Modules with Adaptive Nonlinear Constraints and Efficient Regularizations (NeuroMANCER) is an open-source differentiable programming (DP) library for solving parametric constrained optimization problems, physics-informed system identification, and parametric model-based optimal control. NeuroMANCER is written in [PyTorch](https://pytorch.org/) and allows for systematic integration of machine learning with scientific computing for creating end-to-end differentiable models and algorithms embedded with prior knowledge and physics.

Pip Installation (Recommended)

Now available on PyPI!

```shell
pip install neuromancer
```
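After installing, you can check which release pip actually installed (for example, the v1.4.2 release documented here) by querying the package metadata with Python's standard library. This is a generic sketch rather than a NeuroMANCER utility, and the helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str = "neuromancer") -> Optional[str]:
    """Return the installed version of a pip distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version()
    print(f"neuromancer {v}" if v else "neuromancer is not installed")
```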

Features and Examples

An extensive set of tutorials can be found in the [examples](https://github.com/pnnl/neuromancer/tree/master/examples) folder. Interactive notebook versions of examples are available on Google Colab! Test out NeuroMANCER functionality before cloning the repository and setting up an environment.

Introduction to NeuroMANCER

Part 1: Linear regression in PyTorch vs NeuroMANCER.

Part 2: NeuroMANCER syntax tutorial: variables, constraints, and objectives.

Part 3: NeuroMANCER syntax tutorial: modules, Node, and System class.

Parametric Programming

Part 1: Learning to solve a constrained optimization problem.

Part 2: Learning to solve a quadratically-constrained optimization problem.

Part 3: Learning to solve a set of 2D constrained optimization problems.

Part 4: Learning to solve a constrained optimization problem with projected gradient method.

Ordinary Differential Equations (ODEs)

Part 5: Neural State Space Models (NSSMs) with exogenous inputs

Part 6: Data-driven modeling of resistance-capacitance (RC) network ODEs

### Physics-Informed Neural Networks (PINNs) for Partial Differential Equations (PDEs)


### Control

Additional Documentation

Additional documentation for the library is available in PDF form. There is also an introductory video covering the core features of the library.

Getting Started

Below is a NeuroMANCER syntax example for differentiable parametric programming.
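Written out as math (a sketch in our own notation, where $\pi_\Theta$ is the trainable solution map with weights $\Theta$ and $N$ is the number of sampled parameter pairs), the example trains $\Theta$ so that, for each sampled $(a^i, p^i)$, the map output $(x^i, y^i)$ approximately solves:

```latex
\begin{aligned}
\min_{\Theta}\quad & \frac{1}{N}\sum_{i=1}^{N}\Big[(1 - x^i)^2 + a^i\big(y^i - (x^i)^2\big)^2\Big] \\
\text{s.t.}\quad & x^i \ge y^i, \\
& \big(p^i/2\big)^2 \le (x^i)^2 + (y^i)^2 \le (p^i)^2, \\
& (x^i, y^i) = \pi_\Theta(a^i, p^i), \qquad i = 1, \dots, N.
\end{aligned}
```

In the code, `PenaltyLoss` does not enforce these constraints exactly; it relaxes them into penalty terms scaled by the factor 100 that multiplies each constraint.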

```python
from neuromancer.constraint import variable
from neuromancer.loss import PenaltyLoss
from neuromancer.modules import blocks
from neuromancer.problem import Problem
from neuromancer.system import Node

# primal solution map to be trained: an MLP that maps the
# concatenated parameters (a, p) to the 2-dimensional decision vector x
func = blocks.MLP(insize=2, outsize=2, hsizes=[80] * 4)
sol_map = Node(func, ['a', 'p'], ['x'], name='primal_map')

# problem primal variables (the two columns of the map output "x")
x = variable("x")[:, [0]]
y = variable("x")[:, [1]]

# sampled problem parameters
p = variable('p')
a = variable('a')

# nonlinear objective function
f = (1-x)**2 + a*(y-x**2)**2
obj = f.minimize(weight=1., name='obj')

# constraints, each scaled by a penalty weight of 100
con_1 = 100*(x >= y)
con_2 = 100*((p/2)**2 <= x**2+y**2)
con_3 = 100*(x**2+y**2 <= p**2)

# create constrained optimization loss
objectives = [obj]
constraints = [con_1, con_2, con_3]
loss = PenaltyLoss(objectives, constraints)

# construct constrained optimization problem
components = [sol_map]
problem = Problem(components, loss)
```
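To make the penalty formulation concrete, the sketch below evaluates, in plain Python and independently of NeuroMANCER, the batch-averaged loss that we assume `PenaltyLoss` assembles from `obj` and `con_1` to `con_3`: the objective plus 100 times each constraint violation, where a violation is measured as `max(0, ·)`. The linear (L1) form of the penalty and the mean over the batch are assumptions about the library's defaults, and `penalty_loss` is a hypothetical helper, not part of the NeuroMANCER API.

```python
def penalty_loss(xs, ys, params_a, params_p, weight=100.0):
    """Batch-mean penalty loss for the Getting Started problem (L1 penalties assumed)."""
    n = len(xs)
    if n == 0:
        raise ValueError("empty batch")
    total = 0.0
    for x, y, a, p in zip(xs, ys, params_a, params_p):
        f = (1 - x) ** 2 + a * (y - x ** 2) ** 2  # objective
        r2 = x ** 2 + y ** 2
        v1 = max(0.0, y - x)                # violation of x >= y
        v2 = max(0.0, (p / 2) ** 2 - r2)    # violation of (p/2)^2 <= x^2 + y^2
        v3 = max(0.0, r2 - p ** 2)          # violation of x^2 + y^2 <= p^2
        total += f + weight * (v1 + v2 + v3)
    return total / n


if __name__ == "__main__":
    print(penalty_loss([1.0, 0.0], [1.0, 0.0], [1.0, 1.0], [2.0, 1.0]))
```

For the batch in the usage line, the point (1, 1) with a = 1, p = 2 is feasible and minimizes the objective, so it contributes 0; the origin with a = 1, p = 1 lies inside the inner circle of radius p/2 and contributes 1 + 100 · 0.25 = 26, so the batch mean is 13.0. During training, NeuroMANCER computes the analogous quantity on tensors so that it can be differentiated with respect to the weights of the solution map.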

Examples

For detailed examples of NeuroMANCER usage for control, system identification, and parametric programming as well as tutorials for basic usage, see the scripts in the examples folder.

Community

We welcome contributions and feedback from the open-source community!

Contributing examples

If you have an example of using NeuroMANCER to solve an interesting problem, or of using NeuroMANCER in a unique way, we would love to see it incorporated into our current library of examples. To submit an example, create a folder for your example(s) in the examples folder if there isn’t already an applicable folder, and place your executable Python file or notebook there. Push your code back to GitHub and then submit a pull request. If your example has additional dependencies, please note them, and how to install them, in a comment at the top of your code.
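One way to follow the dependency note is to state the extra dependencies in the module docstring and report any that are missing before the example runs. The sketch below uses only the standard library; `cvxpy` merely stands in for whatever extra package your example needs, and `EXTRA_DEPENDENCIES` and `missing_dependencies` are hypothetical names, not project conventions.

```python
"""Hypothetical example header.

Additional dependencies (not installed with neuromancer):
    pip install cvxpy
"""
import importlib.util

# Illustrative map from each extra import name to the command that installs it.
EXTRA_DEPENDENCIES = {"cvxpy": "pip install cvxpy"}


def missing_dependencies(deps=EXTRA_DEPENDENCIES):
    """Return {module_name: install_command} for every dependency that cannot be found."""
    return {name: cmd for name, cmd in deps.items()
            if importlib.util.find_spec(name) is None}


if __name__ == "__main__":
    for name, cmd in missing_dependencies().items():
        print(f"Missing optional dependency '{name}'; install it with: {cmd}")
```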

Contributing code

We welcome contributions to NeuroMANCER. Please accompany contributions with some lightweight unit tests via pytest (see the test/ folder for examples of easy-to-compose unit tests using pytest). In addition to unit tests, a script that uses any newly introduced classes or modules should be placed in the examples folder. To contribute a new feature, please submit a pull request.
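As an illustration of the kind of lightweight test meant here, the sketch below is a self-contained pytest module. The min-max helpers it tests are hypothetical stand-ins defined in the file itself (they are not NeuroMANCER's `normalize_01` / `denormalize_01`). pytest collects the `test_*` functions automatically, and plain `assert` statements are enough.

```python
# test_minmax.py: a hypothetical, self-contained example of a lightweight pytest module.
import math


def minmax_normalize(values):
    """Hypothetical helper: scale values to [0, 1]; returns (scaled, vmin, vmax)."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        raise ValueError("cannot min-max normalize a constant sequence")
    scaled = [(v - vmin) / (vmax - vmin) for v in values]
    return scaled, vmin, vmax


def minmax_denormalize(scaled, vmin, vmax):
    """Hypothetical inverse of minmax_normalize."""
    return [s * (vmax - vmin) + vmin for s in scaled]


def test_normalized_range():
    scaled, _, _ = minmax_normalize([3.0, -1.0, 7.0])
    assert min(scaled) == 0.0
    assert max(scaled) == 1.0


def test_round_trip():
    data = [3.0, -1.0, 7.0, 2.5]
    scaled, vmin, vmax = minmax_normalize(data)
    restored = minmax_denormalize(scaled, vmin, vmax)
    assert all(math.isclose(x, y) for x, y in zip(data, restored))


def test_constant_input_raises():
    # pytest.raises(ValueError) is the idiomatic alternative; try/except keeps
    # this file free of any import beyond the standard library.
    try:
        minmax_normalize([1.0, 1.0])
    except ValueError:
        return
    raise AssertionError("expected ValueError for constant input")
```

Saved as `test_minmax.py`, it runs with `pytest test_minmax.py`.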

Reporting issues or bugs

If you find a bug in the code or want to request a new feature, please open an issue.

NeuroMANCER development plan

Here are some upcoming features we plan to develop. Please let us know if you would like to get involved and contribute so we may be able to coordinate on development. If there is a feature that you think would be highly valuable but not included below, please open an issue and let us know your thoughts.

Faster dynamics modeling via TorchScript

Control and modelling for networked systems

Easy-to-implement modeling and control with uncertainty quantification

Online learning examples

Benchmark examples of differentiable predictive control (DPC) compared to deep reinforcement learning (RL)

Conda and pip package distribution

CVXPY-like interface for optimization via Problem.solve method

More versatile and simplified time series dataloading

PyTorch Lightning trainer compatibility

Publications

Cite as

```bibtex
@article{Neuromancer2022,
  title={{NeuroMANCER: Neural Modules with Adaptive Nonlinear Constraints and Efficient Regularizations}},
  author={Tuor, Aaron and Drgona, Jan and Skomski, Mia and Koch, James and Chen, Zhao and Dernbach, Stefan and Legaard, Christian Møldrup and Vrabie, Draguna},
  url={https://github.com/pnnl/neuromancer},
  year={2022}
}
```

Authors: Aaron Tuor, Jan Drgona, Mia Skomski, Stefan Dernbach, James Koch, Zhao Chen, Christian Møldrup Legaard, Draguna Vrabie, Madelyn Shapiro

Acknowledgements

This research was partially supported by the Mathematics for Artificial Reasoning in Science (MARS) and Data Model Convergence (DMC) initiatives via the Laboratory Directed Research and Development (LDRD) investments at Pacific Northwest National Laboratory (PNNL), by the U.S. Department of Energy, through the Office of Advanced Scientific Computing Research’s “Data-Driven Decision Control for Complex Systems (DnC2S)” project, and through the Energy Efficiency and Renewable Energy, Building Technologies Office under the “Dynamic decarbonization through autonomous physics-centric deep learning and optimization of building operations” and the “Advancing Market-Ready Building Energy Management by Cost-Effective Differentiable Predictive Control” projects. PNNL is a multi-program national laboratory operated for the U.S. Department of Energy (DOE) by Battelle Memorial Institute under Contract No. DE-AC05-76RL01830.

NeuroMANCER Documentation

- neuromancer package

- Subpackages

- neuromancer.dynamics package

- neuromancer.modules package

- neuromancer.psl package

- Submodules

- neuromancer.psl.autonomous module

- neuromancer.psl.base module

- neuromancer.psl.building_envelope module

- neuromancer.psl.coupled_systems module

- neuromancer.psl.file_emulator module

- neuromancer.psl.gym module

- neuromancer.psl.nonautonomous module

- neuromancer.psl.norms module

- neuromancer.psl.perturb module

- neuromancer.psl.plot module

- neuromancer.psl.signals module

- neuromancer.psl.system_emulator module

- Module contents

- neuromancer.slim package

- Submodules

- neuromancer.arg module

- neuromancer.callbacks module

- neuromancer.constraint module

- neuromancer.dataset module

DictDataset, GraphDataset, SequenceDataset, StaticDataset, batch_tensor(), denormalize_01(), denormalize_11(), destandardize(), get_sequence_dataloaders(), get_static_dataloaders(), normalize_01(), normalize_11(), normalize_data(), read_file(), split_sequence_data(), split_static_data(), standardize(), unbatch_tensor()

- neuromancer.gradients module

- neuromancer.loggers module

- neuromancer.loss module

- neuromancer.plot module

Animator, Visualizer, VisualizerClosedLoop, VisualizerDobleIntegrator, VisualizerOpen, VisualizerTrajectories, VisualizerUncertaintyOpen, cl_simulate(), get_colors(), plot_cl(), plot_cl_train(), plot_loss_DPC(), plot_loss_mpp(), plot_matrices(), plot_model_graph(), plot_policy(), plot_policy_train(), plot_solution_mpp(), plot_traj(), plot_trajectories(), pltCL(), pltCorrelate(), pltOL(), pltPhase(), pltRecurrence(), trajectory_movie()

- neuromancer.problem module

- neuromancer.system module

- neuromancer.trainer module

- Module contents

- Subpackages