RAIEv¶

Responsible AI Evaluation¶

RAIEV, the (R)esponsible (AI) (Ev)aluation package contains protyping of AI-assisted evaluation workflows and interactive analytics for model evaluation and understanding.

GitHub Repo: https://github.com/pnnl/RAIEV

Workflow Categories¶

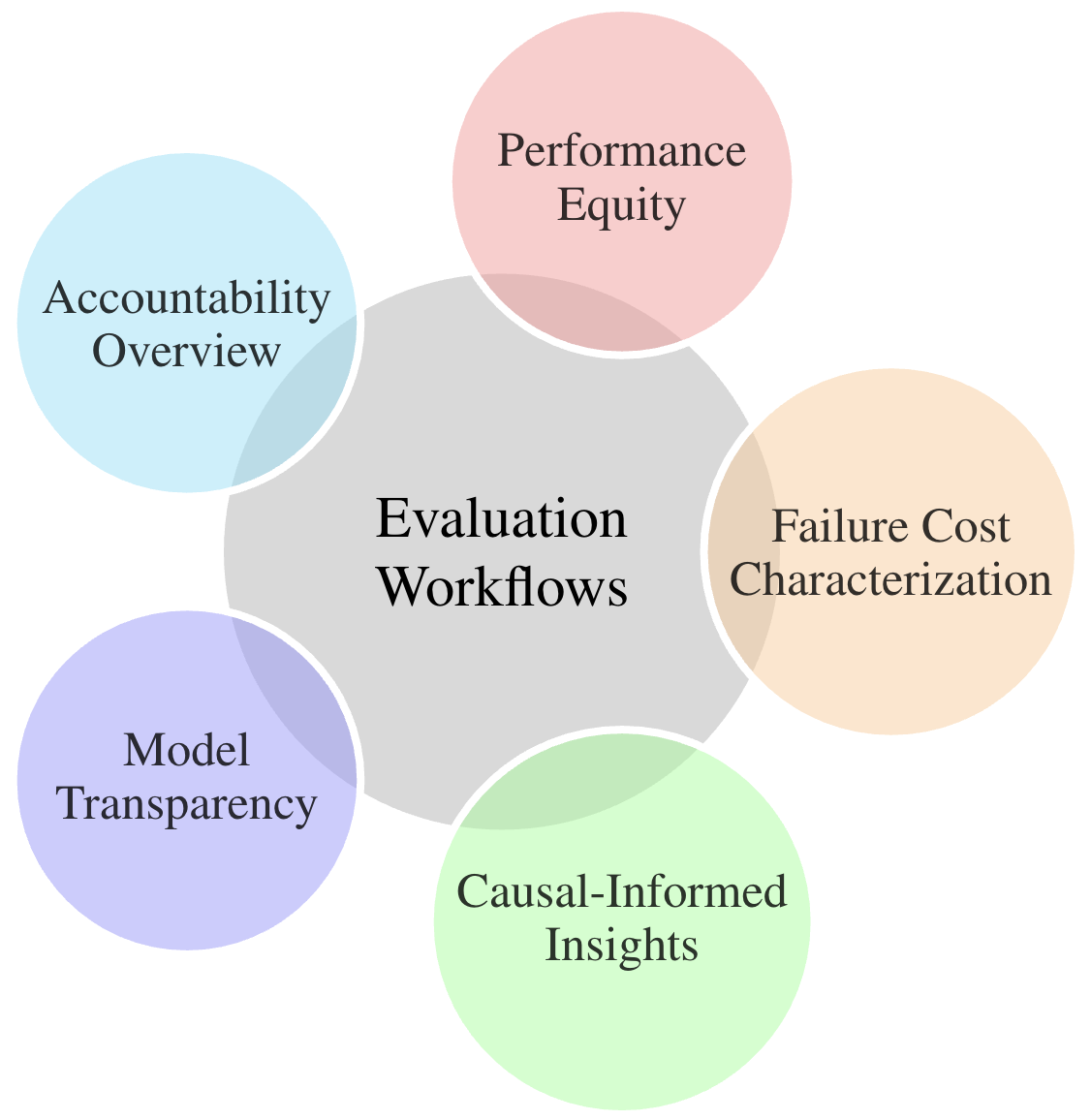

RAIEv organizes workflows into 5 categories:

Accountability Overview evaluations capture aggregate performance (leveraged in traditional evaluations) and targeted evaluations of model performance across critical subsets or circumstances.

Aggregate metric summary (as used in traditional evaluations)

Global sensitivity analyses

Performance Equity evaluations provide insight on whether there is an equitable reliability and robustness across circumstances in which models perform (e.g., specific locations) as well as characteristic-based groupings of model inputs (e.g., variants of inputs like long vs short documents).

Faceted metrics across key circumstances

Identification of significant performance variation

Relative comparisons across key circumstances identifying circumstances or subsets with significant differences in model success/failure/confidence

Targeted sensitivity analyses

Failure Cost Characterization evaluations distinguish errors based on failure costs.

Highlighting prevalence of high impact errors and their associated downstream costs (e.g., introduction of noise vs. reduction of recall, selection of incorrect intervention, etc.

Distinguishing between model errors that are high versus low confidence outputs

Characterizing the reliability of uncertainty (or confidence/probability) measure(s) provided by the model and alignment with correctness of model outputs, i.e., whether it would be a relevant measure for calibrating appropriate trust by end users.

Causal-Informed Insights workflows provide insight on model performance on unseen or underrepresented circumstances using causal discovery and inference methods.

Model Transparency evaluations outline exploratory error analysis workflows that expand on those described above for holistic understanding of model behavior based on input-output relationships introducing transparency (of varying degrees) to even black box systems.

This material was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor the United States Department of Energy, nor Battelle, nor any of their employees, nor any jurisdiction or organization that has cooperated in the development of these materials, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness or any information, apparatus, product, software, or process disclosed, or represents that its use would not infringe privately owned rights.

Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or any agency thereof, or Battelle Memorial Institute. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof.

PACIFIC NORTHWEST NATIONAL LABORATORY

operated by

BATTELLE

for the

UNITED STATES DEPARTMENT OF ENERGY

under Contract DE-AC05-76RL01830

README¶

API Reference¶

Reference¶

Page Reference: